AI in Third Party Risk Management

Explore AI in third party risk management, its advantages, and the corresponding solutions to common challenges. Learn its steps and see if you can implement it in your organization.

Published 25 Sept 2025


What is AI in Third Party Risk Management?

AI in Third-Party Risk Management (TPRM) refers to the integration of artificial intelligence technologies in assessing, monitoring, and mitigating risks associated with third-party vendors, suppliers, and partners. By analyzing vast datasets in real time, AI tools can identify red flags and patterns indicative of financial instability, compliance risks, cybersecurity vulnerabilities, and operational inefficiencies within third-party ecosystems. This proactive approach allows businesses to respond promptly to emerging threats, thereby minimizing the impact of third-party risks on their operations and reputation.

Advantages

As organizations increasingly rely on external parties for various functions, AI-driven solutions strengthen risk management by giving visibility into the risks these relationships pose.

AI in third-party risk management also helps businesses improve efficiency, accuracy, and security in managing third parties. Here are some of the main advantages of leveraging AI in TPRM:

Real-time risk monitoring – AI enables continuous, real-time monitoring of third-party vendors, allowing organizations to detect and respond to emerging risks immediately. By analyzing live data feeds, AI systems can flag unusual activities or deviations from compliance, helping businesses stay agile in mitigating potential threats and reducing reaction times.

Enhanced risk prediction and prevention – With advanced machine learning models, AI can predict potential risks by identifying patterns and anomalies that traditional methods may miss. These predictive capabilities help organizations proactively address risks before they escalate, safeguarding operations from disruptions caused by third-party issues and thereby improving organizational resilience.

Increased efficiency and cost savings – AI automates repetitive tasks in third-party risk management, such as data collection and initial risk assessments, reducing the manual workload on risk management teams. This not only saves time and costs but also allows teams to focus on higher-value risk analysis and decision-making, contributing to improved operational efficiency.

Improved accuracy and risk scoring – AI-driven algorithms can analyze vast and complex datasets with a high degree of precision, producing more accurate and reliable vendor performance assessments. By reducing human error and applying the same criteria to every vendor, AI supports more consistent and objective evaluations of third-party risks, which improves decision-making and reduces the likelihood of oversight.

Enhanced compliance and regulatory adherence – AI tools can automatically track regulatory changes and monitor third-party compliance with up-to-date legal and industry standards. This proactive approach to compliance helps organizations avoid penalties, maintain a strong reputation, and stay ahead of evolving regulations, ultimately supporting a more secure and responsible business environment.

Stay Ahead of Third-Party Risks

Identify and manage vendor risks to protect your business from disruptions and ensure compliance with centralized third-party risk management.

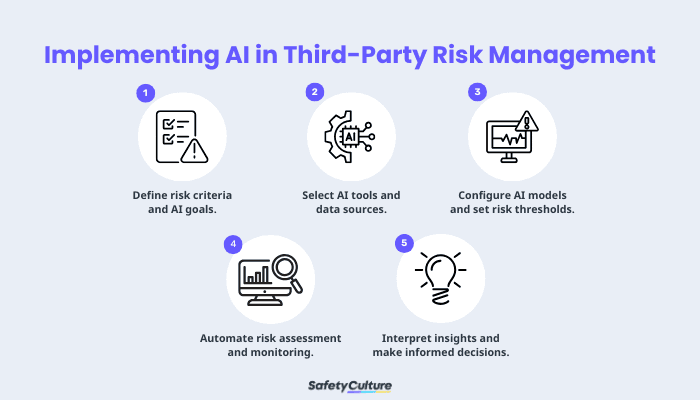

How to Implement AI in Third Party Risk Management

By following these steps and best practices, organizations can implement AI in third-party risk management to improve visibility, efficiency, and resilience in managing third-party relationships. Here’s the step-by-step guide:

Step 1: Define risk criteria and AI goals.

Establish specific risk criteria that align with the organization’s objectives and compliance needs. Identify the goals of using AI in third-party risk management, such as real-time monitoring, predictive analytics, or automation of risk assessments.

Best Practices:

Ensure AI objectives match the organization’s operational risk tolerance and industry-specific risk factors.

Use industry benchmarks to set Key Risk Indicators (KRIs) for better AI-based risk assessments.

Involve cross-functional teams (legal, compliance, IT) to align AI applications with broad organizational goals.

Step 2: Select AI tools and data sources.

Choose AI-powered tools for data collection and risk analysis. Select reliable internal and external data sources, including financial data, regulatory records, and news sources, to ensure comprehensive risk assessments.

Best Practices:

Use only accurate, up-to-date data so that AI-driven insights are reliable.

Select AI solutions that scale with the organization’s evolving third-party network.

Integrate real-time data feeds to keep risk profiles up-to-date and enable timely interventions.

Step 3: Configure AI models and set risk thresholds.

Adjust AI models to meet the defined risk criteria and establish risk thresholds that trigger alerts. Configure machine learning algorithms to prioritize risks by severity, frequency, or potential impact on the organization.

Best Practices:

Periodically fine-tune or calibrate AI models to improve their ability to detect emerging risks and reduce false positives.

Use adaptive thresholds based on real-time insights to adjust as risks evolve.

Implement feedback mechanisms to improve the AI’s accuracy and adjust risk sensitivity over time.
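As a rough illustration of Step 3, the sketch below scores each vendor on severity, frequency, and impact, then flags those whose weighted score reaches an alert threshold. The weights, the 0-to-1 scales, the threshold, and the vendor names are all hypothetical placeholders; in practice they would come from the risk criteria defined in Step 1.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold; tune these to your own risk criteria.
WEIGHTS = {"severity": 0.5, "frequency": 0.2, "impact": 0.3}
ALERT_THRESHOLD = 0.6


@dataclass
class VendorRisk:
    name: str
    severity: float   # 0.0 (negligible) to 1.0 (critical)
    frequency: float  # 0.0 (rare) to 1.0 (constant)
    impact: float     # 0.0 (minor) to 1.0 (business-stopping)

    def score(self) -> float:
        """Weighted sum of the three factors, in the range 0.0 to 1.0."""
        return (
            WEIGHTS["severity"] * self.severity
            + WEIGHTS["frequency"] * self.frequency
            + WEIGHTS["impact"] * self.impact
        )


def prioritize(vendors: list[VendorRisk]) -> list[VendorRisk]:
    """Return vendors at or above the alert threshold, highest score first."""
    flagged = [v for v in vendors if v.score() >= ALERT_THRESHOLD]
    return sorted(flagged, key=lambda v: v.score(), reverse=True)


# Illustrative vendors only; not real data.
vendors = [
    VendorRisk("Acme Logistics", severity=0.9, frequency=0.4, impact=0.8),
    VendorRisk("Beta Cloud", severity=0.3, frequency=0.2, impact=0.4),
    VendorRisk("Gamma Payroll", severity=0.7, frequency=0.6, impact=0.6),
]
alerts = prioritize(vendors)
for v in alerts:
    print(f"ALERT {v.name}: score {v.score():.2f}")
```

A fixed weighted sum is only one option. Keeping the weights and threshold in one place makes it easier to recalibrate them as feedback on false positives comes in.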

Step 4: Automate risk assessment and monitoring.

Implement AI-driven automation for ongoing monitoring of third-party risks. Use AI to conduct periodic assessments, issue alerts, and provide insights on third-party-related issues.

Best Practices:

Automate repetitive assessments and other high-frequency tasks to free up resources for strategic risk management activities.

Set up real-time alerts to notify stakeholders immediately of significant changes in third-party risk status.

Ensure AI-based monitoring tools integrate with current risk management and compliance systems to create a unified TPRM process.
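One simple way to picture the alerting part of Step 4 is to compare two snapshots of vendor risk scores and report any vendor whose risk tier has changed. The sketch below assumes a 0-to-100 score, hypothetical tier boundaries at 40 and 70, and made-up vendor names; a real system would send these messages to its own notification channel.

```python
def tier(score: float) -> str:
    """Map a 0-100 risk score to a tier (boundaries are hypothetical)."""
    if score >= 70:
        return "high"
    if score >= 40:
        return "medium"
    return "low"


def detect_tier_changes(
    previous: dict[str, float], current: dict[str, float]
) -> list[str]:
    """Compare two score snapshots and describe every vendor whose tier changed."""
    messages = []
    for vendor, new_score in current.items():
        old_score = previous.get(vendor)
        if old_score is None:
            continue  # new vendor: no baseline to compare against
        old_tier, new_tier = tier(old_score), tier(new_score)
        if old_tier != new_tier:
            messages.append(f"{vendor}: {old_tier} -> {new_tier}")
    return messages


# Illustrative snapshots only; not real data.
last_week = {"Acme Logistics": 35, "Beta Cloud": 55, "Gamma Payroll": 72}
this_week = {"Acme Logistics": 38, "Beta Cloud": 74, "Gamma Payroll": 65}
changes = detect_tier_changes(last_week, this_week)
print(changes)
```

Alerting on tier changes rather than on every score movement is a design choice that keeps notification volume manageable for stakeholders.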

Step 5: Interpret insights and make informed decisions.

Use AI-generated insights to identify trends, make data-backed decisions, and implement risk mitigation strategies. Evaluate risk and incident reports regularly to spot patterns and adjust third-party relationships or oversight as needed.

Best Practices:

Educate teams on interpreting AI-driven reports and making informed decisions based on risk analytics.

Use AI-generated risk scenarios to test potential responses to identified risks.

Share insights with relevant teams to coordinate a unified response to identified third-party risks.

Challenges and Solutions of AI in TPRM

Implementing AI in third-party risk management comes with several challenges that organizations must address to ensure successful deployment. Here are the key challenges and effective solutions to help navigate them:

Data Quality and Accessibility

One of the primary challenges in using AI in third-party risk management is ensuring the quality, completeness, and accessibility of data. AI models rely on accurate and comprehensive data from third-party sources to generate reliable insights. Incomplete or outdated data can lead to false positives, missed risks, and suboptimal decision-making.

Solution:

Establish strict data governance practices that emphasize data accuracy, timeliness, and completeness. Collaborate with trusted data providers and utilize automated data validation tools to monitor and maintain high-quality datasets for AI models.
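Automated validation can start small. The sketch below checks a vendor record for completeness and timeliness before it reaches an AI model. The field names, the one-year freshness limit, and the sample records are assumptions for illustration, not a prescribed schema.

```python
from datetime import date, timedelta

# Hypothetical schema and freshness limit; adapt both to your own data.
REQUIRED_FIELDS = ("vendor_id", "credit_rating", "last_audit_date")
MAX_AGE = timedelta(days=365)


def validate_record(record: dict, today: date) -> list[str]:
    """Return a list of data-quality problems; an empty list means the record passes."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Timeliness: the most recent audit must fall within the freshness limit.
    audit = record.get("last_audit_date")
    if isinstance(audit, date) and today - audit > MAX_AGE:
        problems.append("last_audit_date is stale")
    return problems


# Illustrative records only; not real data.
today = date(2025, 9, 25)
good = {"vendor_id": "V-001", "credit_rating": "A", "last_audit_date": date(2025, 3, 1)}
bad = {"vendor_id": "V-002", "credit_rating": "", "last_audit_date": date(2023, 1, 15)}
print(validate_record(good, today))
print(validate_record(bad, today))
```

Records that fail validation can be sent back to the data provider or held out of scoring, so that gaps are visible instead of silently distorting risk assessments.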

Regulatory Compliance and Ethical Considerations

AI-driven TPRM solutions must comply with regulations around data privacy, cybersecurity, and ethical AI usage. This can be challenging because privacy laws, such as the General Data Protection Regulation (GDPR), restrict certain types of data collection and sharing, potentially limiting what AI can do.

Solution:

Work closely with legal and compliance teams to ensure AI models meet regulatory requirements. Implement ethical AI practices, such as anonymizing sensitive data and conducting regular compliance audits, to safeguard privacy and ensure AI algorithms remain within legal and ethical boundaries.
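As one possible safeguard for sensitive data, the sketch below replaces a direct identifier with a keyed hash before a record is passed to an AI pipeline. Strictly speaking this is pseudonymization rather than full anonymization, and pseudonymized data can still count as personal data under GDPR, so confirm the approach with your legal team. The field names and the key are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest.

    The same input and key always produce the same token, so records
    can still be linked without exposing the original value.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; in practice, load it from a secrets manager.
key = b"replace-with-a-managed-secret"

# Illustrative record only; not real data.
record = {"contact_email": "jane@example.com", "risk_score": 62}
safe_record = {**record, "contact_email": pseudonymize(record["contact_email"], key)}
print(safe_record)
```

Using a keyed hash rather than a plain one makes it harder for anyone without the key to recover identifiers by hashing guessed values.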

Integration with Existing Systems

Integrating AI-powered TPRM tools with existing risk management, compliance, and operational systems can be complex and resource-intensive. System incompatibility can hinder seamless AI adoption, resulting in a lack of unified risk insights.

Solution:

Select only AI solutions that are compatible with your organization’s existing infrastructure and that support API-based integration. Consider using scalable AI platforms that allow for gradual adoption and customization to enhance compatibility with current systems.

High Implementation Costs and Resource Requirements

Deploying AI in third-party risk management can require significant investments in software, infrastructure, and specialized talent. Organizations may face budget constraints and resource limitations, especially when establishing AI capabilities from scratch.

Solution:

Start with small-scale AI implementations that address specific high-priority areas within TPRM. Focus on cost-effective, cloud-based AI solutions that offer scalable options, allowing the organization to expand AI capabilities as budget and resources grow.

Complexity in Interpreting AI Insights

AI-generated insights can be complex and require specialized knowledge for accurate interpretation. This can be a challenge for teams unfamiliar with AI analytics, potentially leading to misinterpretation of risk data.

Solution:

Provide training programs to help risk management teams understand AI analytics and interpret insights effectively. Foster cross-departmental collaboration, enabling AI specialists to work closely with risk management teams and promote accurate data interpretation.

Implement AI in Third Party Risk Management with SafetyCulture

Why Use SafetyCulture?

SafetyCulture is a mobile-first operations platform adopted across industries such as manufacturing, mining, construction, retail, and hospitality. It’s designed to equip leaders and working teams with the knowledge and tools to do their best work—to the safest and highest standard.

Enable your team to evaluate all potential risks from third parties, implement effective risk mitigation strategies, and coordinate responses to relevant issues seamlessly. Centralize performance metrics monitoring and gain comprehensive insights into overall third-party management, all within a single platform.

✓ Save time and reduce costs ✓ Stay on top of risks and incidents ✓ Boost productivity and efficiency ✓ Enhance communication and collaboration ✓ Discover improvement opportunities ✓ Make data-driven business decisions
